There can be multiple bands inside one raster. We usually want to obtain values from DB. I have wondered, which solution is faster:

- Single raster with (N) bands => I will have (1) row for every data

- Single raster with (1) band => I will have (N) rows (one for every data)

I have written simple test, where I select 100 values from single raster at random positions [x, y] from rasters.

My raster data have size 720x360.

I have created table with two rows, first row has (1) band data, second (N) bands (where N = 6).

- Selecting data from (1) band in (N) band raster - 240 ms

- Selecting data from (1) band in (1) band raster - 70 ms

I have tried to use gist index for raster data

CREATE INDEX rast_gist_idx ON raster_data_table USING GIST (ST_ConvexHull(rast));

but results were the same.

In both test, I have run the single query to obtain one result at a time. What if we try to select multiple values in one row

(

SE).

WITH pairs(x,y) AS (

VALUES (x1, y1), (x2, y2) ... other points ...

)

SELECT

x, y,

ST_Value(rast, 1, x, y) AS val,

... other bands ...

FROM

raster_data_table

CROSS JOIN pairs

WHERE ....

I have run this query for 100 random points at positions [x, y] (same raster data as before).

- Selecting data from (1) band in (1) band raster - one query - 40 ms

- Selecting data from (1) band in (1) band raster - single query for each [x, y] - 67 ms

- Selecting data from (1) band in (N) band raster - one query - 223 ms

- Selecting data from (1) band in (N) band raster - single query for each [x, y] - 252 ms

- Selecting data from (N) bands in (N) band raster - one query - 1332 ms

- Selecting data from (N) bands in (N) band raster - single query for each [x, y] - 1390 ms

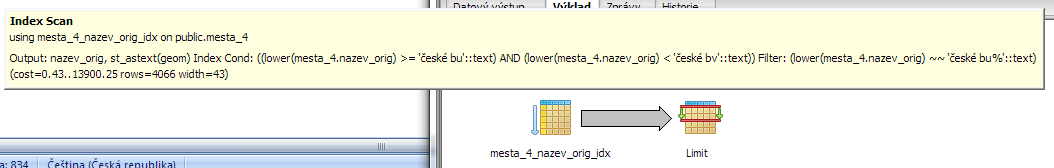

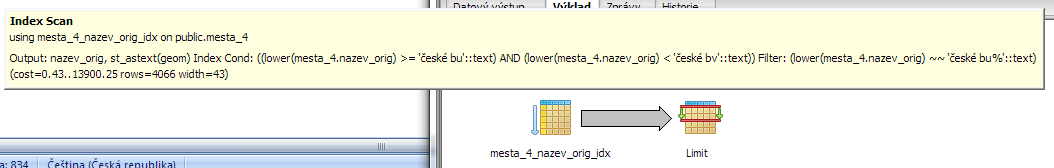

If you create an index, it does not automatically mean, that it will be used. Sometimes, DB may decide to use sequential scan instead, because it will be faster

(eg. you will return about 90% of all rows).

But to be sure, that index is used even for small groups of result, you can use EXPLAIN. Assuming, you are using PgAdmin III and run query from it, it can be

run via F7.

If we want to use

UNACCENT, we need to install it as extension:

CREATE EXTENSION unaccent;

We will build index:

CREATE INDEX cities_name_idx

ON cities

USING btree

(LOWER(name) COLLATE pg_catalog."C");

Note: COLLATE can have

C,

POSIX or

default.

C and

POSIX are using ANSI C sorting of characters. This is quite OK for our solution, since we

want to search UNACCENT characters.

If I have used

default I have no indexing at all.

Assume this query:

SELECT name

FROM cities

WHERE LOWER(name) LIKE 'české bu%'

LIMIT 2

But if we use this:

SELECT name

FROM cities

WHERE UNACCENT(LOWER(name)) LIKE 'ceske bu%'

LIMIT 2

Time: 1562ms

No indexing is used this time and sequential search is used. This is because we are searching over

UNACCENT(LOWER(...)).

Problem is that

UNACCENT can

not be used as part of the btree index, since it is not IMMUTABLE. Postgres only accepts IMMUTABLE functions for indexes

(If a function can return a different result for the same input, the index could silently break)!

Solution (based on

SO):

First create your own function:

CREATE OR REPLACE FUNCTION unaccent_lower(text)

RETURNS text AS

$func$

SELECT unaccent('unaccent', lower($1))

$func$ LANGUAGE sql IMMUTABLE SET search_path = public, pg_temp;

Use this function to create index:

CREATE INDEX cities_name_idx

ON cities

USING btree

(unaccent_lower(name) COLLATE pg_catalog."POSIX");

Finally, use this in your query:

SELECT name

FROM cities

WHERE unaccent_lower(name) LIKE 'ceske bu%'

LIMIT 2

Time: 11ms

Second solution, maybe more straightforward, is to add a new column

name_search with data copy

UPDATE cities SET name_search = UNACCENT(LOWER(name))

and a classic index

CREATE INDEX cities_name_search_idx

ON cities

USING btree

(name_search COLLATE pg_catalog."POSIX");

and use a classic SQL search without the need for function calls. The searched string is "unaccent_lower" outside the database, eg. in a script via PHP.

The timing is also a little faster than with created function.

We can store raster data in PostGIS. It is nice and works. The problem is searching. If raster is of a bigger size (bigger is meant something 200px in one dimension),

there is a problem witch searching and other operations. For this, is better to split data into tiles and create index to speed up searching.

So far so good. But what if we want to update tiles, after we have updated the original raster?

For this, I am using this query:

UPDATE t SET raw_data = f.raw_data_tile

FROM (

SELECT id, ST_Tile(raw_data, , ) As raw_data_tile

FROM m

WHERE

) as f

WHERE t.id = f.id

AND ST_UpperLeftX(t.raw_data) = ST_UpperLeftX(f.raw_data_tile)

AND ST_UpperLeftY(t.raw_data) = ST_UpperLeftY(f.raw_data_tile)

The important part is matching existing tiles to the newly created tiles via

ST_UpperLeftX(t.raw_data) = ST_UpperLeftX(f.raw_data_tile)

. Each tile contains information about its top (upper) left corner and we are matching those.

The problem with this query is its speed. It is not the fastest UPDATE and running it in "real-time" can be quite expensive.